Yesterday, I was at an event, talking about Transactions, ACID, Isolation phenomena, etc., in PostgreSQL.

In PostgreSQL or any other DBMS, concurrent Transactions are isolated from each other to a degree that depends on the Isolation level set, up to SERIALIZABLE, where the result is the same as if the Transactions had run one after another (each DBMS vendor implements the levels in its own way).
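As a quick sketch of how the Isolation level can be inspected and chosen in PostgreSQL (any Query Tool window or psql session should do):

```sql
-- Isolation level of the current session/transaction.
SHOW transaction_isolation;

-- Default applied to new transactions (read committed unless reconfigured).
SHOW default_transaction_isolation;

-- Start a transaction with an explicit isolation level.
BEGIN ISOLATION LEVEL SERIALIZABLE;
SHOW transaction_isolation;
ROLLBACK;

-- Equivalent form: SET TRANSACTION must come before the first query
-- of the transaction.
BEGIN;
SET TRANSACTION ISOLATION LEVEL REPEATABLE READ;
ROLLBACK;
```

Note that PostgreSQL accepts READ UNCOMMITTED as a level name but treats it as READ COMMITTED.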

The deliberately oversimplified database table presented above, with its two records of bank account holders and their balances, will be used throughout this article.
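For readers who want to follow along, a table like this could be created roughly as below. The column names, the second account holder and its balance are assumptions made for illustration; only the accounts table name, Steve Jobs and his balance of 1000 come from the examples in this article.

```sql
-- Hypothetical schema: column names are assumed, not taken from the screenshots.
CREATE TABLE accounts (
    id      integer PRIMARY KEY,
    holder  text    NOT NULL,
    balance integer NOT NULL CHECK (balance >= 0)  -- constraint backing "Consistency"
);

INSERT INTO accounts (id, holder, balance) VALUES
    (1, 'Steve Jobs', 1000),
    (2, 'Second Holder', 1000);  -- placeholder row, name and balance invented
```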

The focus of the discussion is on concurrent Transactions with the READ COMMITTED Isolation Level (the default in PostgreSQL), but it also demonstrates two transactions running side by side, one SERIALIZABLE and the other READ COMMITTED.

READ COMMITTED vs READ COMMITTED

Two transactions were started. Observe the CLOCK icons in pgAdmin, indicating that a Transaction is in progress (in each Query Tool window)...

On the left: the balance of Steve Jobs was already updated to 1100

On the right: Steve Jobs still has a balance of 1000. Why?

PostgreSQL's default Isolation level is READ COMMITTED, which does not allow the DIRTY READ phenomenon (data that one Transaction has not committed yet being visible to other Transactions).

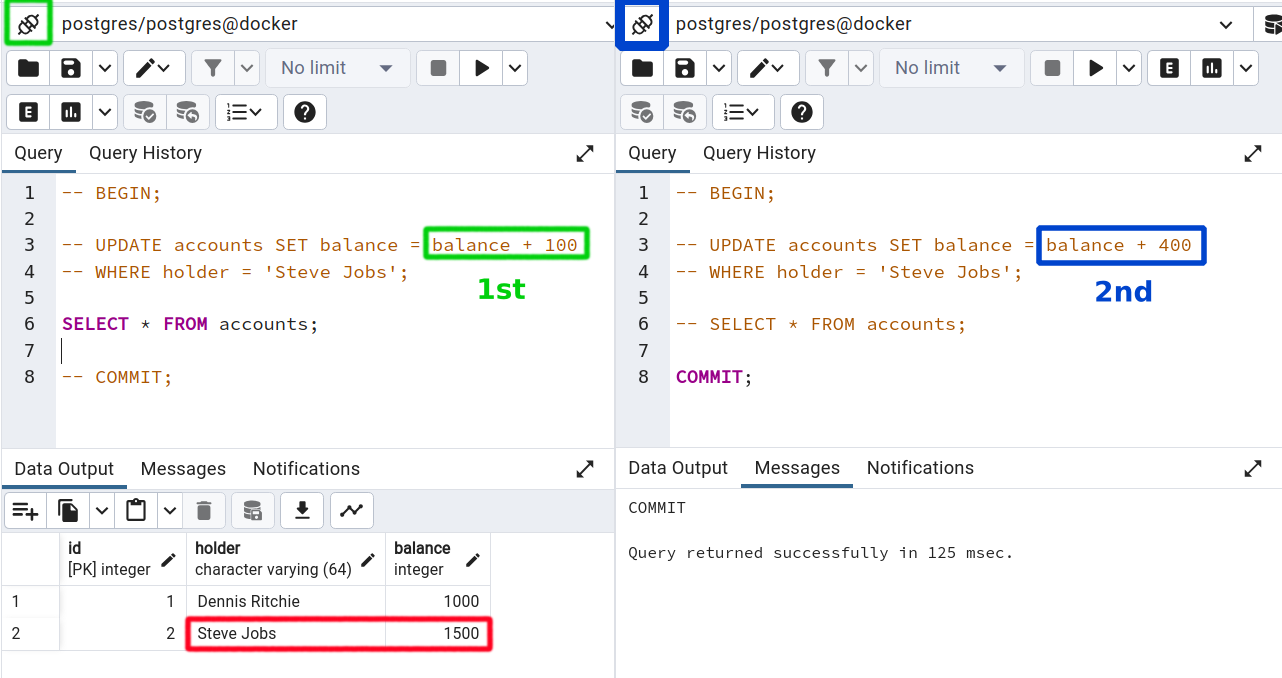
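The situation can be reproduced with two sessions, roughly as follows. This is a sketch that assumes an accounts table with hypothetical holder and balance columns; the statements in the original Query Tool windows may have looked different.

```sql
-- Session A (left window)
BEGIN;
UPDATE accounts SET balance = balance + 100 WHERE holder = 'Steve Jobs';
-- A sees its own uncommitted change: 1100
SELECT balance FROM accounts WHERE holder = 'Steve Jobs';

-- Session B (right window), while A is still open
BEGIN;
-- B sees only the last committed value: 1000 (no dirty read)
SELECT balance FROM accounts WHERE holder = 'Steve Jobs';
```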

Adding More Money

On the left: the Transaction has not finished yet.

On the right: more money to Steve Jobs account, but the query doesn't finish...

The row for Steve Jobs in the accounts table is locked by the Transaction on the left (an UPDATE locks the rows it modifies, not the whole table). Only when this Transaction finishes (COMMIT or ROLLBACK) will the Transaction on the right be able to add 400 bucks more to Steve Jobs' account.

On the left: Transaction has finished and 100 bucks were deposited to Steve Jobs account

On the right: still in progress, but since the Transaction on the left has finished (row lock released), the Transaction on the right sees a balance of 1100 and adds 400 to it.
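The sequence of events just described can be sketched as the following two-session script. It continues from the point where Session A has already added 100 inside an open Transaction, and again assumes hypothetical holder and balance columns.

```sql
-- Session B (right): blocks here, waiting for the row lock held by Session A.
UPDATE accounts SET balance = balance + 400 WHERE holder = 'Steve Jobs';

-- Session A (left): committing releases the row lock.
COMMIT;

-- Session B (right): the UPDATE now completes against the newly committed
-- row version, so 400 is added to 1100 rather than to 1000.
SELECT balance FROM accounts WHERE holder = 'Steve Jobs';  -- 1500 inside B
COMMIT;
```

While Session B is blocked, a third session can usually confirm the lock wait with something like `SELECT pid, wait_event_type, wait_event, query FROM pg_stat_activity WHERE wait_event_type = 'Lock';`.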

But why is data committed by one Transaction visible to another Transaction that is still in progress?

At the READ COMMITTED Isolation level, each statement in a Transaction sees the data that was COMMITTED before that statement started. That being said, one phenomenon that might happen is the so-called NON-REPEATABLE READ, where changes committed by one Transaction become VISIBLE to other READ COMMITTED Transactions that are still in progress.

If these Transactions read the data again, they might get a different value (NON-REPEATABLE), which might lead to inconsistencies between the READ and WRITE operations of concurrent Transactions. This does not, of course, affect the ACID property of "Consistency" (transactions must respect database constraints, e.g. balance >= 0).
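A NON-REPEATABLE READ can be observed in isolation with a sketch like this one (hypothetical holder and balance columns, both sessions at the default READ COMMITTED level):

```sql
-- Session B
BEGIN;
SELECT balance FROM accounts WHERE holder = 'Steve Jobs';  -- first read

-- Session A (no explicit transaction, so the change commits immediately)
UPDATE accounts SET balance = balance + 100 WHERE holder = 'Steve Jobs';

-- Session B, same transaction, same query
SELECT balance FROM accounts WHERE holder = 'Steve Jobs';  -- second read is 100 higher
COMMIT;
```

The two SELECT statements in Session B return different values even though Session B itself changed nothing.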

In the end, the Transaction on the right has finished, adding 400 bucks more to Steve Jobs' account and leaving it with a balance of 1500, as seen on the left, where no Transaction is in progress.

SERIALIZABLE vs READ COMMITTED

Just as before, two Transactions are in progress, the first one with Isolation Level SERIALIZABLE.

The records are unchanged since the previous example.

In line with the specification, the PHANTOM READ phenomenon doesn't happen in a Transaction running with Isolation Level SERIALIZABLE...

...even when the READ COMMITTED Transaction finishes.

It doesn't matter: the SERIALIZABLE Transaction sees only its own snapshot of the accounts table, and nothing that other Transactions commit afterwards.
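A sketch of this scenario is shown below. The inserted third row and the column names are assumptions for illustration; the statements used in the original demo are not reproduced here.

```sql
-- Session A (SERIALIZABLE)
BEGIN ISOLATION LEVEL SERIALIZABLE;
-- The first query fixes the snapshot this transaction will keep seeing.
SELECT holder, balance FROM accounts ORDER BY id;

-- Session B (READ COMMITTED)
BEGIN;
INSERT INTO accounts (id, holder, balance) VALUES (3, 'Third Holder', 500);  -- hypothetical row
UPDATE accounts SET balance = balance + 100 WHERE holder = 'Steve Jobs';
COMMIT;

-- Session A: same rows and same balances as in its first SELECT,
-- so neither a phantom row nor a changed balance shows up.
SELECT holder, balance FROM accounts ORDER BY id;
COMMIT;
```

One design consequence worth knowing: if Session A tried to UPDATE the row that Session B changed and committed, PostgreSQL would abort Session A with a serialization failure (SQLSTATE 40001), and the application would be expected to retry the Transaction.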

Some Reflections

Weaker isolation levels offer better performance (less overhead) at the cost of temporary data inconsistency, in the form of phenomena such as NON-REPEATABLE and PHANTOM READS.

Which Isolation Level suits best really depends on the Business Rules that govern the design of a Backend service. A PHANTOM READ or a NON-REPEATABLE READ might not cause any trouble in terms of UX for an application that operates on the Stock Market (where prices fluctuate from second to second). For an E-commerce solution, however, having the price of a product change all of a sudden while a User is in the middle of buying it is not the best scenario: it would be bad to start the purchase of a product that costs US$ 100.00 and, in the middle of the transaction (before paying), see the price increase to US$ 125.00.
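As a minimal sketch of the E-commerce case, assuming a hypothetical products table with id and price columns, a stricter Isolation level keeps the price stable for the duration of one database Transaction:

```sql
-- Hypothetical table and values, for illustration only.
BEGIN ISOLATION LEVEL REPEATABLE READ;
SELECT price FROM products WHERE id = 42;  -- price shown to the User

-- ... another session commits a price change here ...

SELECT price FROM products WHERE id = 42;  -- still the same price as the first read
COMMIT;
```

This only holds while the purchase runs inside a single database Transaction; a checkout that spans several requests would need the price to be stored with the order instead.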

If you have any questions, I'd be glad to address them.